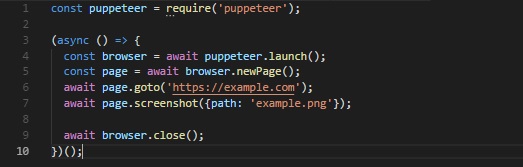

Distributed crawler powered by Headless Chrome

Crawlers based on simple requests to HTML files are generally fast. However, they sometimes end up capturing empty bodies, especially when the websites are built on modern frontend frameworks such as AngularJS, React, and Vue.js. Scraping is predominantly used to build large datasets for data analytics.

So far you've learned how to start a browser with Puppeteer, and how to control its actions with some of Puppeteer's most useful functions: `page.click()` to emulate mouse clicks, `page.waitForSelector()` to wait for elements to render on the page, `page.waitForFunction()` to wait for actions in the browser, and `page.$$eval()` to extract data from a browser page. But no real scraping project finishes after scraping one page.

``repositories.`)``
`await new Promise(r => setTimeout(r, 10000))`
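The four functions named above can be combined roughly as in the following sketch. It is not the tutorial's own code: the helper name `loadMoreAndExtract` and both selector parameters are hypothetical, and the real selectors have to be found by inspecting the target page. The sketch waits for the first items, clicks a "load more" control, waits until more items exist than before, and then reads the text of every item.

```javascript
// A minimal sketch, assuming `page` is a Puppeteer Page that is already open.
// `cardSelector` and `loadMoreSelector` are hypothetical placeholders:
// inspect the live page to find selectors that actually match.
async function loadMoreAndExtract(page, { cardSelector, loadMoreSelector }) {
  // Wait until at least one item has rendered.
  await page.waitForSelector(cardSelector);

  // Count the items currently on the page.
  const before = await page.$$eval(cardSelector, (cards) => cards.length);

  // Emulate a mouse click on the "load more" control.
  await page.click(loadMoreSelector);

  // Wait, inside the browser, until more items exist than before the click.
  // Extra arguments after the options object are passed to the page function.
  await page.waitForFunction(
    (selector, count) => document.querySelectorAll(selector).length > count,
    {},
    cardSelector,
    before,
  );

  // Extract the whitespace-normalized text of every item.
  return page.$$eval(cardSelector, (cards) =>
    cards.map((card) => card.textContent.trim().replace(/\s+/g, ' ')),
  );
}
```

Comparing the item count before and after the click gives the script an explicit condition to wait for, which is generally more reliable than pausing for a fixed number of seconds.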

With Puppeteer, you can use (headless) Chromium or Chrome to open websites, fill in forms, click buttons, extract data, and generally perform any action that a human could when using a computer. This makes Puppeteer a powerful tool for web scraping and for automating complex workflows on the web. You don't need to be familiar with Puppeteer or web scraping to enjoy this tutorial, but knowledge of HTML, CSS, and JavaScript is expected.

To showcase the basics of Puppeteer, we will create a simple scraper that extracts data about GitHub Topics. You'll be able to select a topic, and the scraper will return information about repositories tagged with this topic. We will use Puppeteer to start a browser, open the GitHub topic page, click the Load more button to display more repositories, and then extract information about each repository.

To use Puppeteer you'll need Node.js and a package manager. We'll use NPM, which comes preinstalled with Node.js. You can confirm that both are present on your machine by running `node -v && npm -v`. To get the most out of this tutorial, you need Node.js version 16 or higher. If you're missing either Node.js or NPM, or have unsupported versions, visit the installation tutorial to get started.

Now that we know our environment checks out, let's create a new project and install Puppeteer. Create and enter the project directory with `mkdir puppeteer-scraper && cd puppeteer-scraper`, then run `npm init -y` and `npm install puppeteer`. The first time you install Puppeteer, it will download browser binaries, so the installation may take a bit longer.

Complete the installation by adding `"type": "module"` to the package.json file. This enables ECMAScript module syntax such as `import`. If you don't do this, Node.js will throw `SyntaxError: Cannot use import statement outside a module` when you run your code. See the Node.js documentation on ECMAScript modules to learn more.
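As a rough illustration of the first two steps described above (starting a browser and opening a topic page), here is a minimal sketch. The helper names `topicUrl` and `openTopicPage` are hypothetical, and the URL pattern `https://github.com/topics/<topic>` is an assumption about how GitHub addresses topic pages. Puppeteer is loaded with a dynamic `import()` inside the function so that the URL helper can be used on its own; in a project with `"type": "module"`, a top-level `import puppeteer from 'puppeteer'` would be the more usual form.

```javascript
// Build the address of a GitHub topic page.
// Assumption: topic pages live at https://github.com/topics/<topic>.
function topicUrl(topic) {
  return `https://github.com/topics/${encodeURIComponent(topic)}`;
}

// Start a browser and open the page for the given topic.
// Returns both handles so the caller can keep working with the page
// and close the browser when finished.
async function openTopicPage(topic) {
  // Loaded lazily; requires `npm install puppeteer` to have been run.
  const { default: puppeteer } = await import('puppeteer');

  const browser = await puppeteer.launch(); // headless by default
  const page = await browser.newPage();
  await page.goto(topicUrl(topic));
  return { browser, page };
}
```

A caller would typically run `const { browser, page } = await openTopicPage('javascript')`, work with `page`, and finish with `await browser.close()` so the browser process does not linger.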
